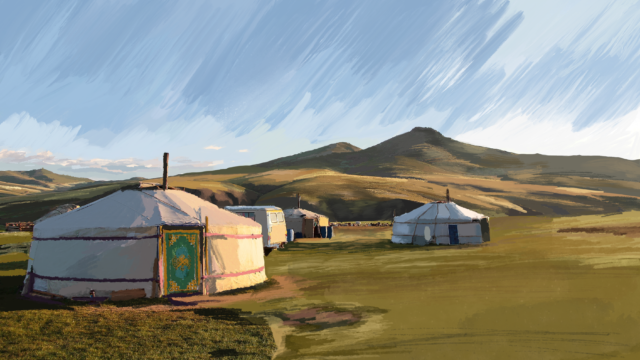

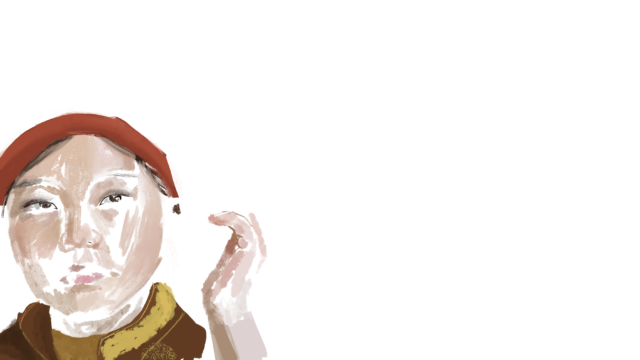

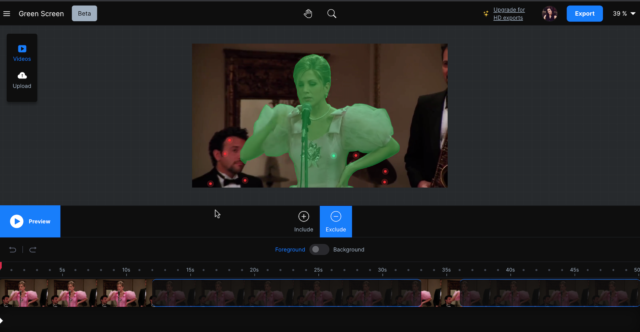

What will a text-to-image generator do with abstract language depicting love and pain?

I.

i remember when carmine rivers seeped from my

shins, tracing a route back to the bathtub. once,

i dreamt of bathing in my own bruises. my legs

still bleed whenever i miss you.

II.

who cares about subclavian

and carotid arteries. blood

is blood. everyone knows that

model hearts don’t look

like the real thing, they’re

all too red and stiff; genuine

myocardium is pink, fragile,

too fragile, disgusting, raw.

III.

It was dark. You were faceless. The air was stagnant. I was silent.

I didn’t touch you, but I knew you were warm.

We lay our bodies by the marsh, staring up at the sky,

silence slitting our throats. The darkness shrouds our bodies

like a pall. I wondered if two cadavers could kiss.