This is a little late, but what struck me so hard about the pieces we investigated on facial recognition is that all of this is already here. It’s already in so much of our lives in so many ways. Another thing that struck me was the research behind the paleness factor, that is, how computers identify white faces better (the research itself, not the claim, which unfortunately I already knew very well). You can see this happening even in Twitter’s auto-cropping system.


fr0g.fartz-facereadings

Clearview.ai seems super sketchy, and it’s scary how big their database is. It’s scary that a guy who seems like a douchebag is the only one choosing who gets to use this technology. Where is government regulation when it’s reported that he is allowing stores like Walmart to access it? It seems like anybody with money can access Clearview.

It’s also crazy how much race has to do with facial recognition, and I really admire Joy Buolamwini for educating others. However, I am wondering how the fuck there are 1.6 thousand dislikes on her video compared to 2.6 thousand likes. I don’t understand what could compel somebody to dislike this video.

bookooBread – faceReadings

- In the John Oliver video, I was really struck by the story of the Brown student getting falsely accused of a terrorist attack. With the current biases in facial recognition technology, it is likely that stories like this will continue to occur. Our law enforcement agencies currently want to pin crimes to minorities – and this technology will only help them do that.

- “Any face that deviates from the established norm will be harder to detect” (Joy Buolamwini). This line stuck with me. It is one of the main points of the video and goes along with what I was saying before. The “norms” that exist in our culture are heavily rooted in white supremacy, so, as Joy explains in the video, current facial recognition technology accepts those conditions and uses them as norms.

YoungLee-Facereadings

John Oliver’s video mostly addressed how facial recognition software can harm people’s right to privacy, while the other sources addressed algorithmic bias. I think that there should be government regulation of facial recognition software to prevent improper use by everyday civilians and big corporations. Perhaps government officials and police should have to get a warrant from a judge to access the technology, similar to a search warrant issued when there is probable cause.

I thought algorithmic bias was a very interesting topic. It reminded me of an incident that occurred a few years ago when Google Photos categorized Black people as ‘gorillas’ (Link: https://www.theverge.com/2018/1/12/16882408/google-racist-gorillas-photo-recognition-algorithm-ai ). I thought it was interesting how humankind’s own biases translated into software, which further shows how pressing the issue is.

rathesungod- facereadings

- In Joy’s TED Talk, I really appreciated how she brings out the problems of racial inclusion within machine learning. When she was explaining her rules that who codes, how we code, and why we code all matter, it really highlighted how things should always keep adjusting as technology develops.

- The reality of how far machine learning and face recognition have come does scare me, especially when it comes to how we decide what is real or fake. As John was talking about all the different cases in which facial recognition has been used, both positively and negatively, it made me think twice.

minniebzrg-facereadings

- It was sort of alarming that clearview.ai gathered over 3 billion images from public databases such as Instagram, Facebook, and Twitter to use for their facial recognition program. This means photographs from when I was a reckless teenager posting all of my daily activities are probably in their database.

- Algorithmic bias exists even in the judicial system. For example, a judge could decide how long an individual is imprisoned based on machine learning.

- Instead of changing society or people, the better answer would be to go back and rewire the foundations of programming to remove any biases. It also matters whose hands these programs fall into.

shrugbread-facereadings

I found the clearview.ai coverage very interesting. Scraping the bottom of the barrel of the internet to identify every instance of an individual was scary, but also scary in a way where the worst of it can only be left up to our imagination. If a project like clearview.ai exists and comes under great scrutiny, then who is to say that they haven’t done more nefarious things unchecked, or that a competitor hasn’t?

I also found interesting the point made in the article “Against Black Inclusion in Facial Recognition” that law enforcement doesn’t need more tools to identify, surveil, and subsequently oppress Black people. The article treats facial recognition more as a time bomb primed to explode in the faces of oppressed people.

bumble_b – FaceReadings

In Nabil Hassein’s article “Against Black Inclusion in Facial Recognition”, they make this really interesting point: “This analysis clearly contradicts advocacy of ‘diversity and inclusion’ as the universal or even typical response to bias. Among the political class, ‘Black faces in high places’ have utterly failed to produce gains for the Black masses.” I think it’s really fascinating and sad that people have to look at a concept that statistically excludes a minority and say “Please keep it that way” because their inclusion would only be used as a deadly weapon against them… it just shows that anti-racism is way more complex of a system than “just make sure everyone is included and equal!”

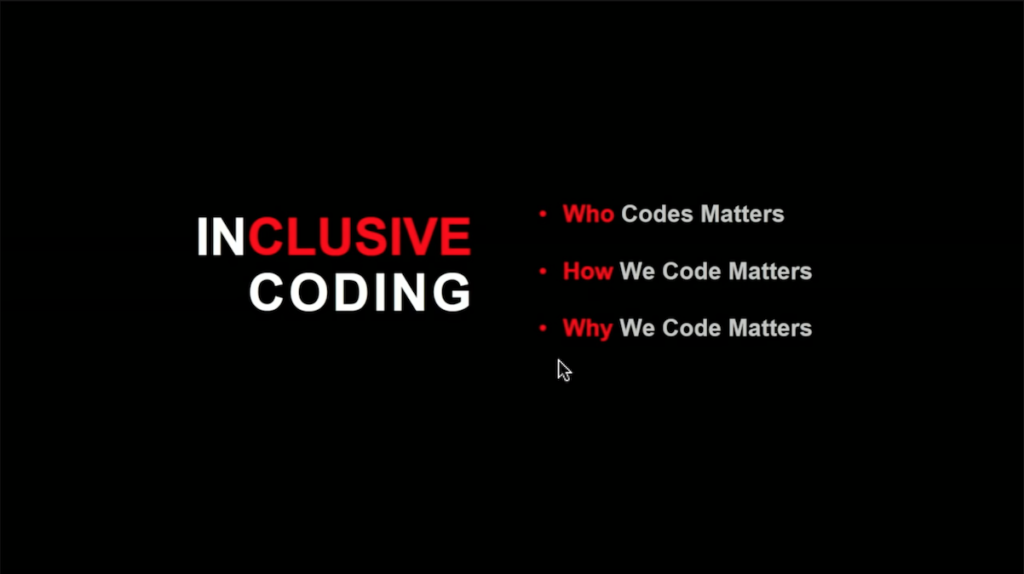

Also, I really liked this slide in Joy Buolamwini’s talk:

I think this is a really simple and powerful message. Yes, who is in the room when decisions are being made is essential for any company/organization to think about. How people make things and why they’re making them are also very important considerations. I’d go as far as to say that even if your “why am I making this?” is positively impactful, you need to consider how your code could be used if it got into the wrong hands, too.

Also, really quickly, the SkyNet thing in Last Week Tonight felt straight out of a dystopian movie. I mean, seriously? It made me laugh out loud in the most terrified/uncomfortable way.