Category: Deliverables-08

YoungLee – Situated Eye

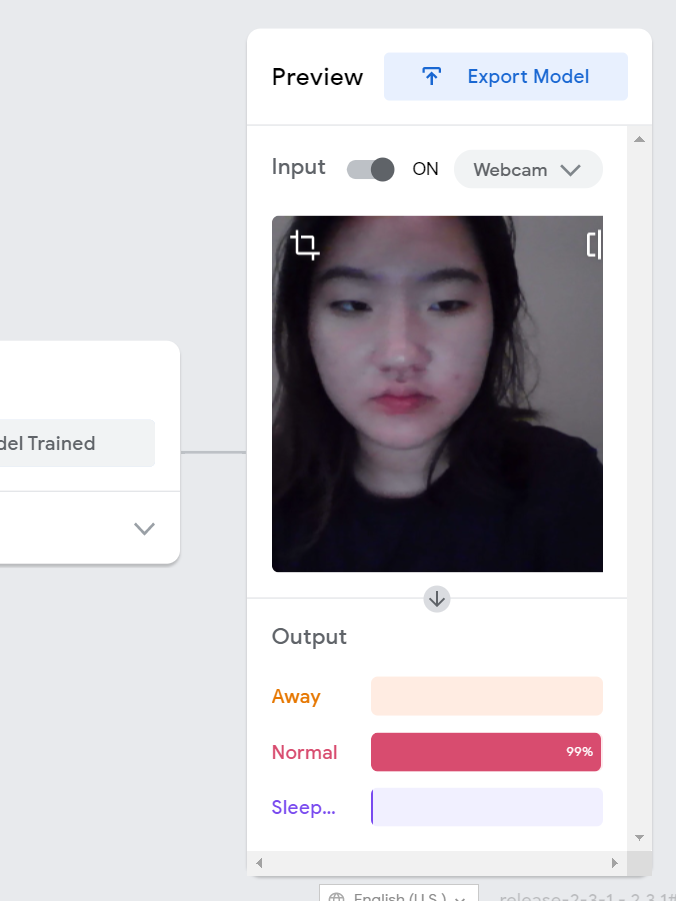

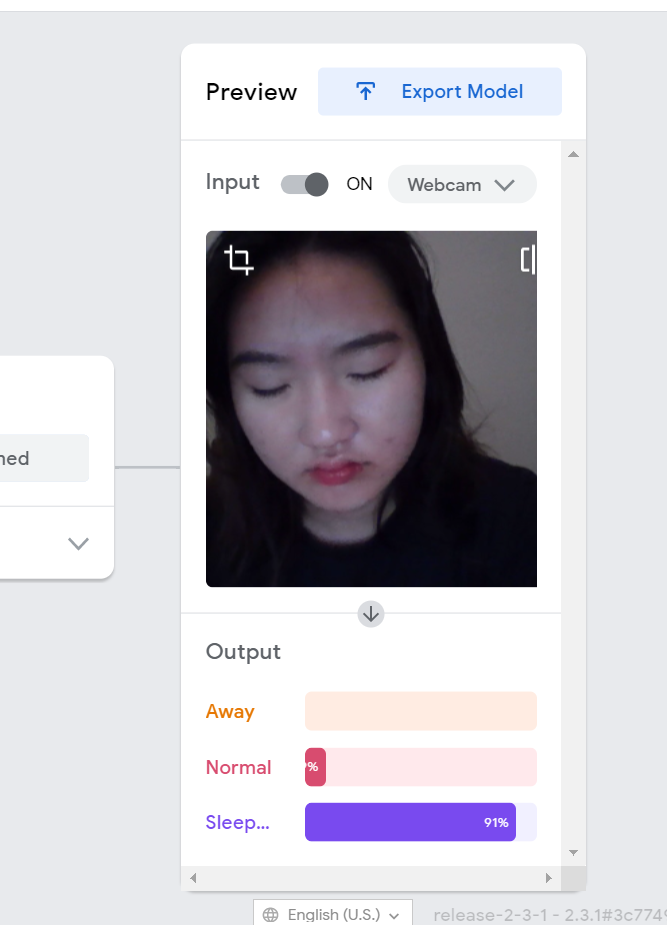

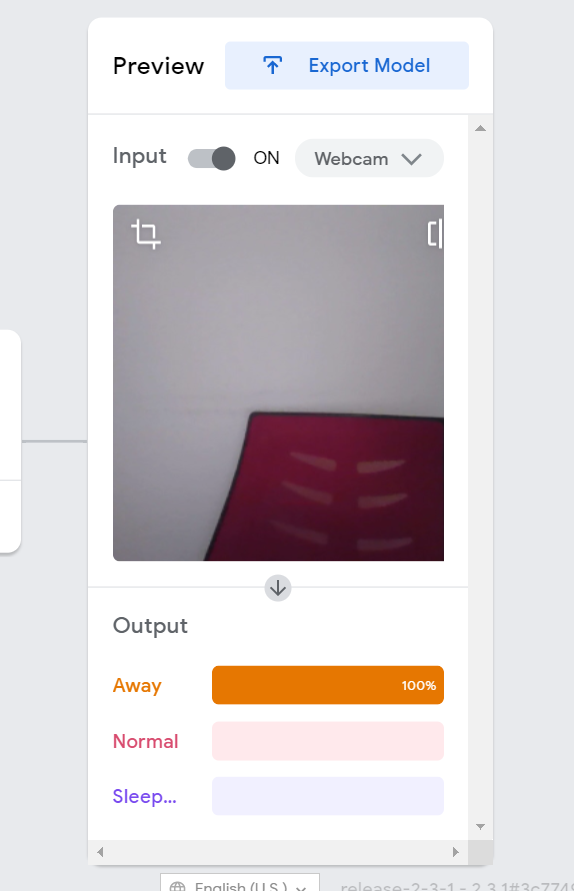

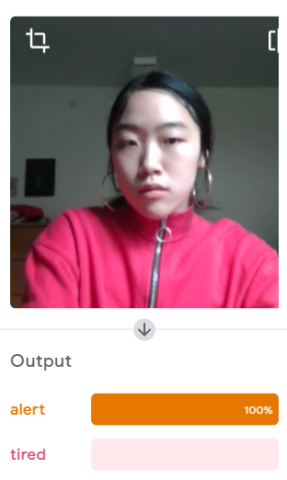

Normal:

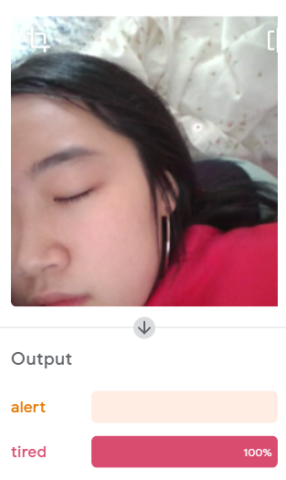

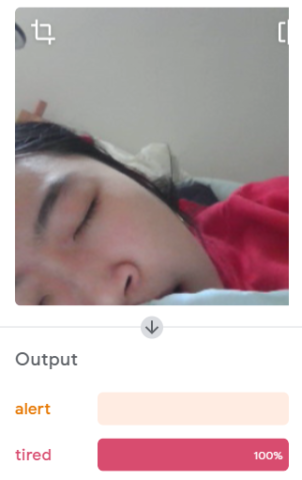

Sleeping:

Away:

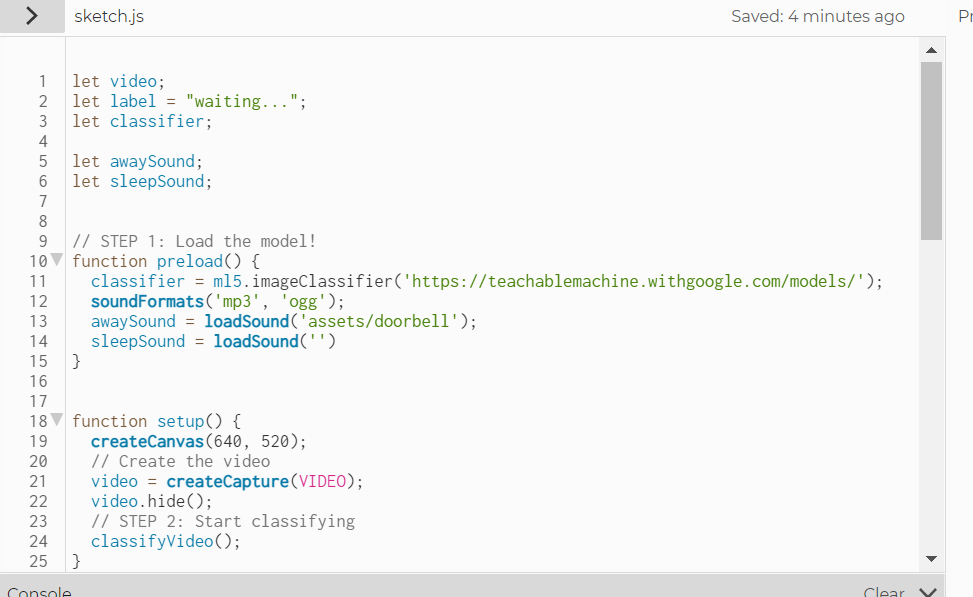

Link to code: https://editor.p5js.org/SayTheYoung/sketches/b8O93YmuD

I did not get to finish the project. My idea, however, was a program that notices when you fall asleep during class and wakes you up with a sound whenever you start dozing off. It was inspired by my habit of always falling asleep in class.

Problems I ran into:

Apart from bugs in my code, I noticed that it is difficult for the machine to distinguish between me simply closing my eyes or looking down and me actually sleeping.
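One common way to separate a quick blink or downward glance from real sleep is to require the "Sleeping" prediction to persist for a while before reacting. The sketch below is a minimal, hypothetical version of that idea in plain JavaScript (the language of the p5.js project). It assumes each prediction arrives as an array of `{ label, confidence }` objects sorted by confidence, as ml5's classifier callbacks typically provide; the label name, confidence threshold, and frame count are illustrative guesses, not values from the project.

```javascript
// Hypothetical persistence filter: only report "asleep" once the top
// prediction has been "Sleeping" with high confidence for several
// consecutive frames, so a blink or a quick look down does not trigger it.
// Label, threshold, and frame count are assumptions for illustration.
function createSleepDetector(options = {}) {
  const label = options.label ?? "Sleeping";
  const minConfidence = options.minConfidence ?? 0.8;
  // About one second if predictions arrive ~30 times per second (assumed rate).
  const framesRequired = options.framesRequired ?? 30;

  let streak = 0; // consecutive confident "Sleeping" frames seen so far

  // results: array of { label, confidence }, highest confidence first
  return function update(results) {
    const top = results[0];
    if (top && top.label === label && top.confidence >= minConfidence) {
      streak += 1;
    } else {
      streak = 0; // any other frame resets the count
    }
    return streak >= framesRequired; // true means "play the wake-up sound"
  };
}
```

This only filters out short glitches; if looking down for a long time still gets classified as "Sleeping", that would need more varied training examples rather than more filtering.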

gaomeilan – Image Processor

What will a text to image generator do with abstract language depicting love and pain?

I.

i remember when carmine rivers seeped from my

shins, tracing a route back to the bathtub. once,

i dreamt of bathing in my own bruises. my legs

still bleed whenever i miss you.

II.

who cares about subclavian

and carotid arteries. blood

is blood. everyone knows that

model hearts don’t look

like the real thing, they’re

all too red and stiff; genuine

myocardium is pink, fragile,

too fragile, disgusting, raw.

III.

It was dark. You were faceless. The air was stagnant. I was silent.

I didn’t touch you, but I knew you were warm.

We lay our bodies by the marsh, staring up at the sky,

silence slitting our throats. The darkness shrouds our bodies

like a pall. I wondered if two cadavers could kiss.

fr0g.fartz-SituatedEye

gaomeilan – Situated Eye

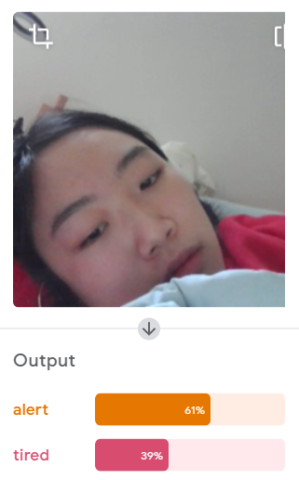

I tried to train Teachable Machine to recognize when I’m awake/alert versus when I’m sleeping/tired. For the awake class, I recorded clips of me focusing on the camera, usually with my eyes open, and I also tried to capture more “alert” body language. For the tired class, I recorded clips of me in bed with my eyes closed, yawning, and showing “tired” body language (like resting my head against other surfaces).

minniebzrg-SituatedEye

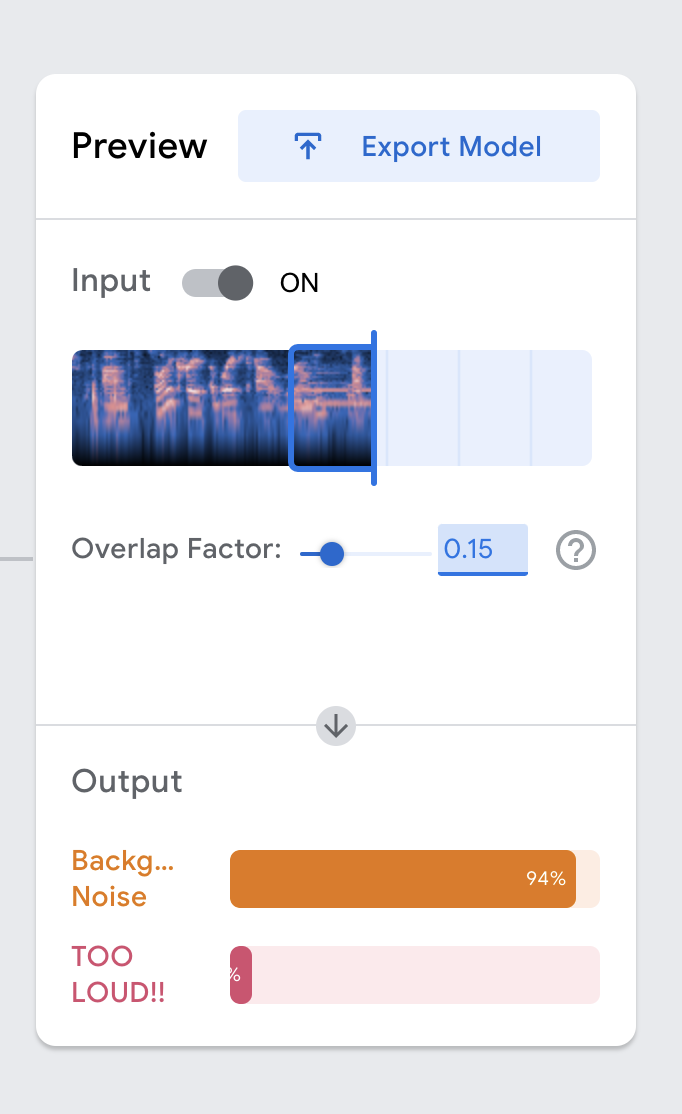

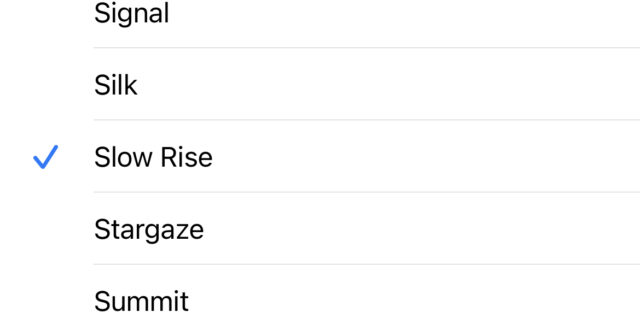

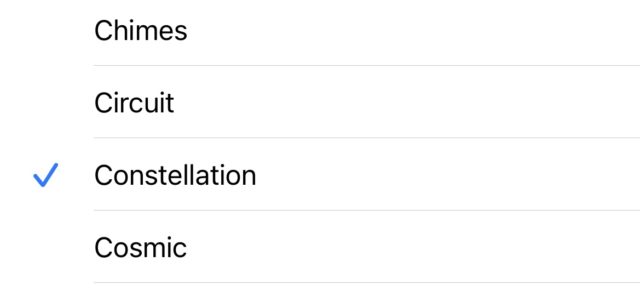

These are the ringtones that my sister, whom I share a room with, annoyingly uses for all of her alarms. I wake up easily to my alarms, and I even wake up naturally on time. My older sister, however, is the opposite. She needs over 10 alarms, and she tends to sleep through them all. It’s pretty annoying when you sleep 10 feet away from her. As a result, these two alarm sounds TRIGGER me. When I was playing them to annoy her, she told me to just wake her up when they go off, because she can’t do it herself. So I created a system that alerts me to wake her up when it hears either of these two alarms. I used my iPhone to play the sounds directly into my AirPods microphone. Teachable Machine distinguished the first alarm clearly, but the second alarm, called Slow Rise, kept jumping between all three categories. After evaluating the model, it seems that Slow Rise sounds too much like Constellation. I might need to use a different alarm sound or keep recording more audio samples. I think this project could be taken a step further with some coding.
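When one class keeps flickering between categories, smoothing the predictions over time can help alongside recording more samples. The sketch below is a hypothetical majority vote over the last few confident predictions; the window size and confidence threshold are assumptions, and `label`/`confidence` are meant to come from the top result of whatever sound-classifier callback a p5.js version ends up using. It cannot fix two sounds that the model genuinely confuses, but it does stop single stray guesses from changing the output.

```javascript
// Hypothetical smoothing for a classifier whose top label "jumps around":
// keep the last `windowSize` confident predictions and only report a label
// once it holds a strict majority of that window. Values are illustrative.
function createMajorityVote(windowSize = 10, minConfidence = 0.7) {
  const history = []; // most recent confident labels, oldest first

  return function update(label, confidence) {
    if (confidence >= minConfidence) {
      history.push(label);
      if (history.length > windowSize) history.shift(); // drop the oldest vote
    }
    // Count how many times each label appears in the window.
    const counts = new Map();
    for (const l of history) counts.set(l, (counts.get(l) ?? 0) + 1);

    let best = null;
    let bestCount = 0;
    for (const [l, c] of counts) {
      if (c > bestCount) {
        best = l;
        bestCount = c;
      }
    }
    // Require a strict majority of the full window before committing.
    return bestCount > windowSize / 2 ? best : null;
  };
}
```

With a window of 5, three matching confident predictions are needed before a label is reported, and predictions below the confidence threshold are ignored entirely.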

![[video-to-gif output image]](https://im.ezgif.com/tmp/ezgif-1-2ef3070b9f74.gif)

^ GIF of how I transferred the ringtones to Teachable Machine. (P.S. I’m not mad.)

![[video-to-gif output image]](https://im.ezgif.com/tmp/ezgif-1-947ab600bcab.gif)

I’m trying to bring this project into p5.js so I can make a visual alert to wake up my sister.

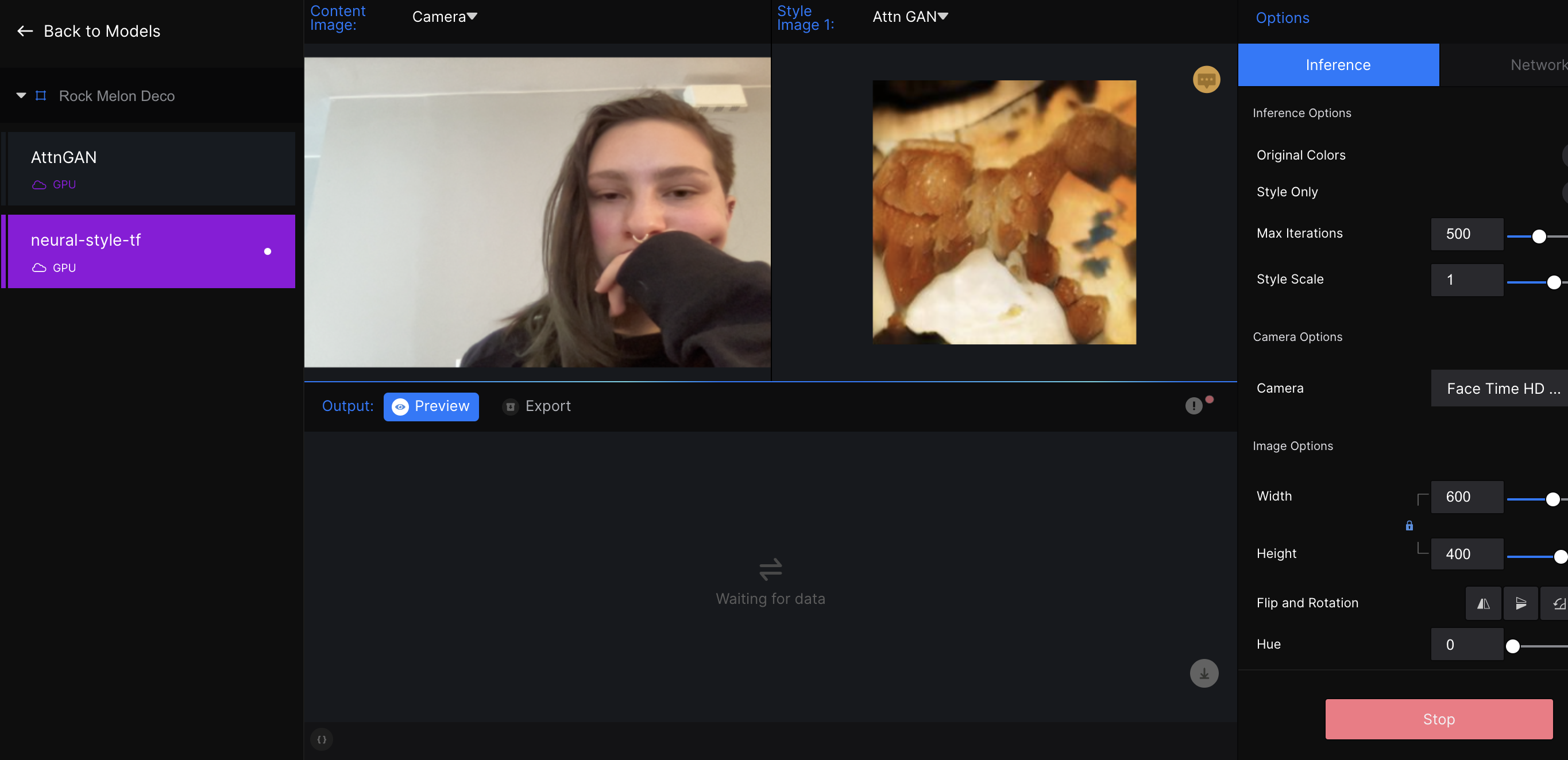

bookooBread-ImageProcessor

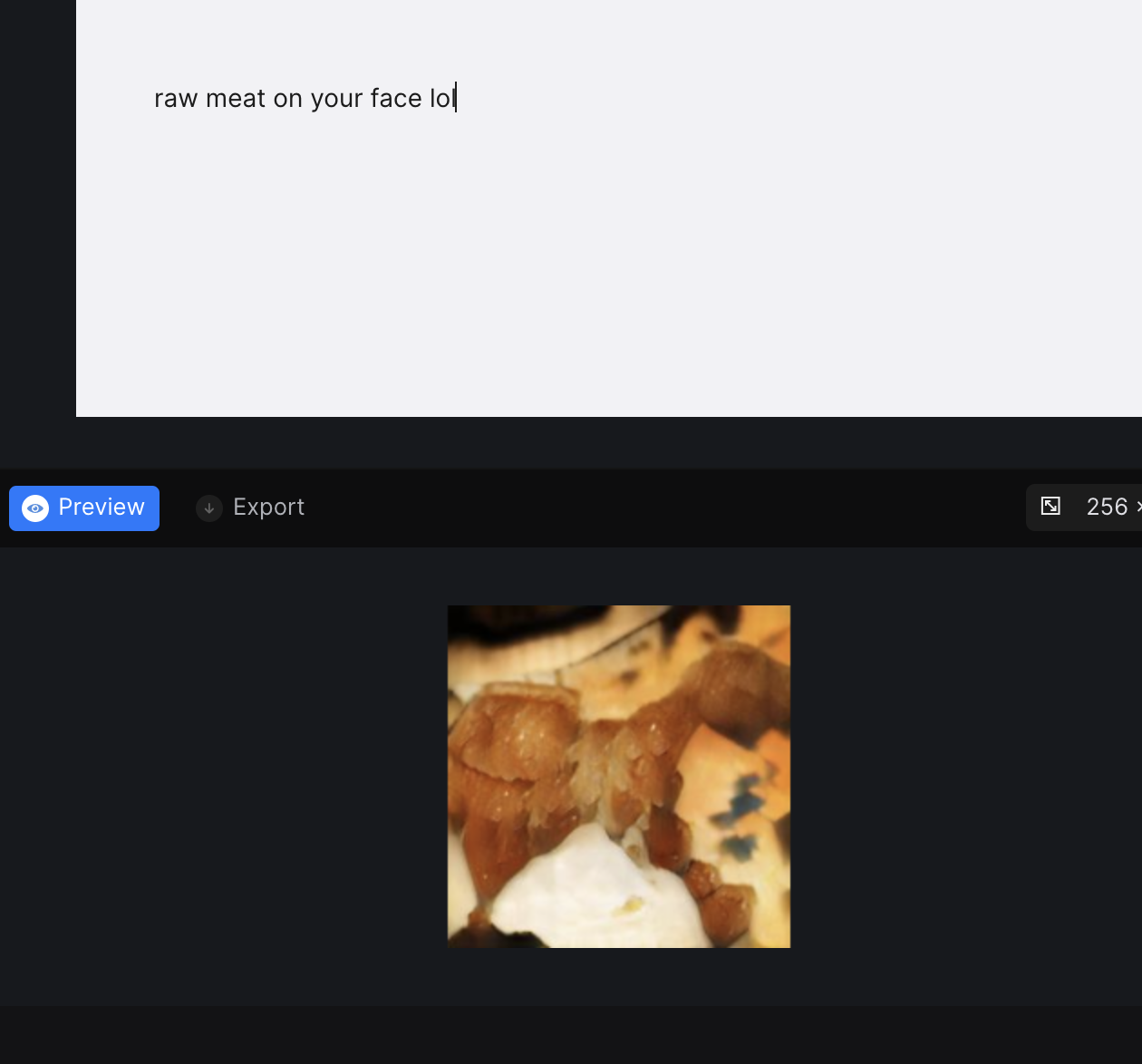

First of all, I spent way too much time on the Situated Eye part of this deliverable, so I didn’t have much time for this. I plan on playing with Runway a lot more in the future when I have time.

My idea was to use the text to image generator to create some weird ass visuals… and that sort of worked?

But then I wanted to connect these images to other models and use them to style other images/video or mix them with a face. None of these worked, and I think that’s because I haven’t spent enough time working with Runway yet, so I’m not quite sure how all the tools work. But I will keep at it since it is so, so goddamn cool!

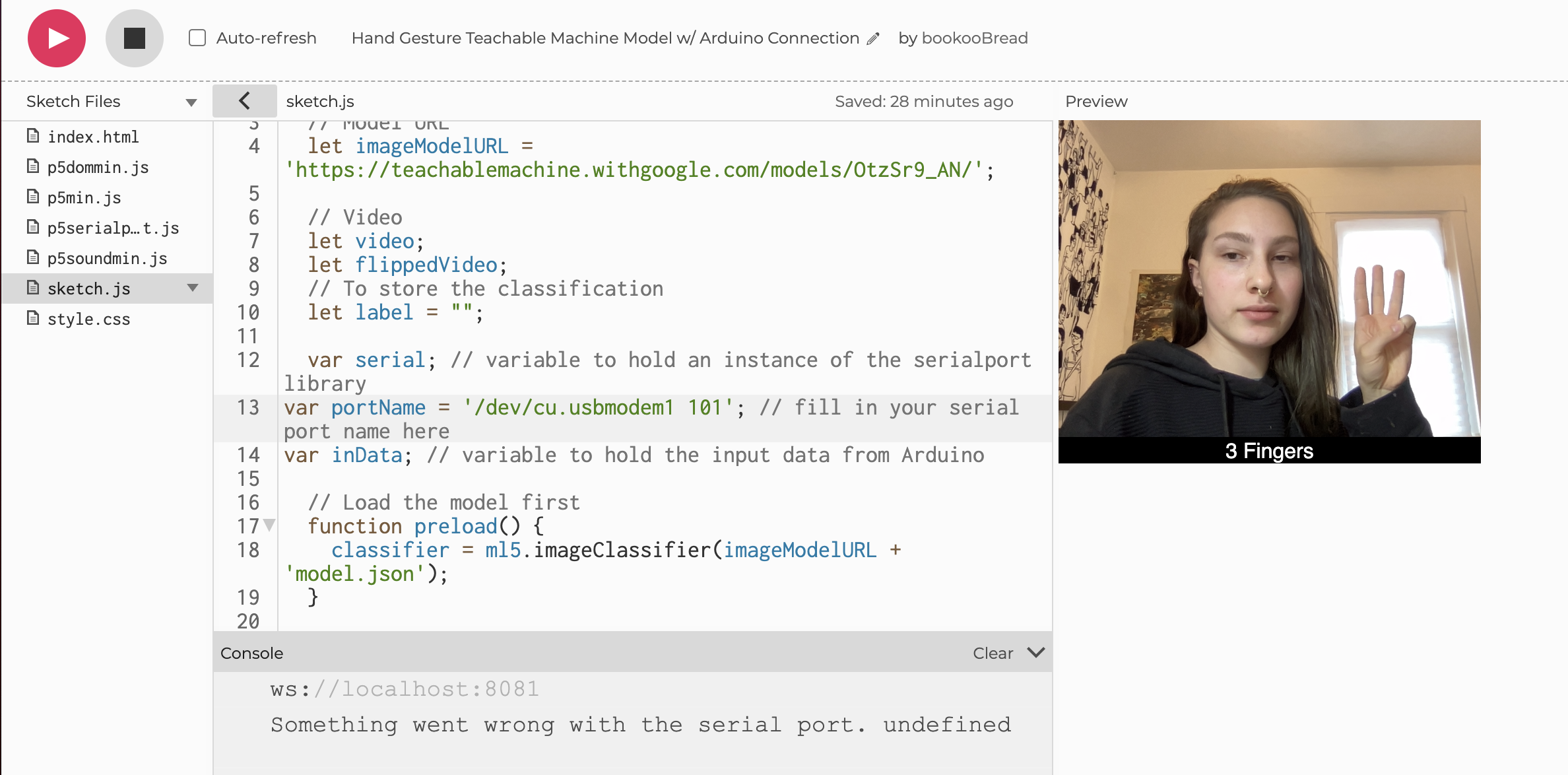

bookooBread-TeachableMachine

Hand Gesture Teachable Machine Model w/ Arduino Connection/Soon-to-be Arduino Kinetic Sculpture

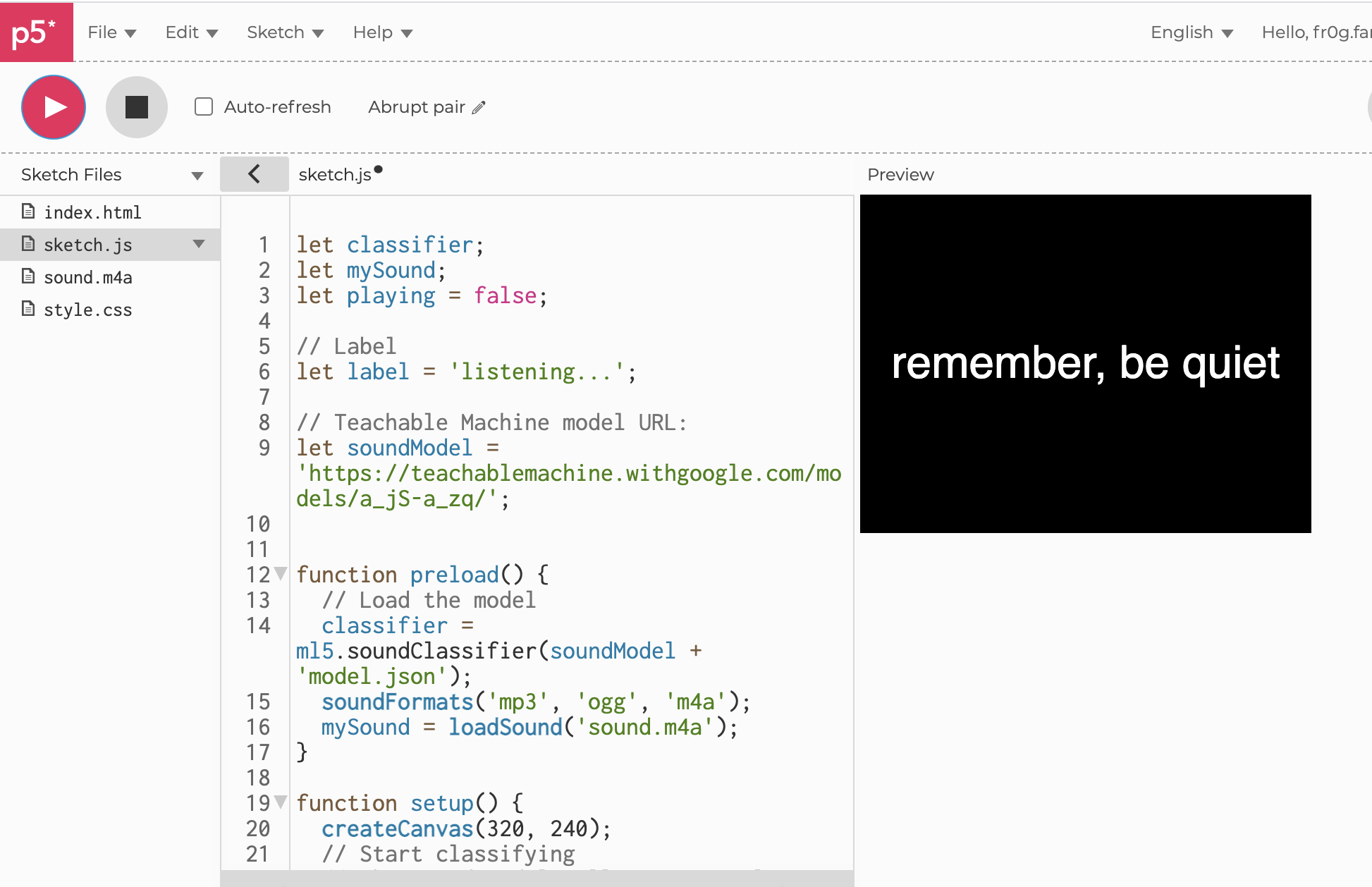

So, I started out doing something kind of boring: I was going to teach a model to recognize when I touch my hair (a nervous habit of mine) and make an annoying sound, which hopefully would’ve helped me break that habit. However! I started thinking about a project I had begun in my physical computing class, where I was going to write a hand tracking/gesture recognition program to control an Arduino kinetic sculpture. I am currently using the OpenCV library for Processing… but Teachable Machine offers a different approach. My idea was: if I could use TM as my hand gesture recognition model, this project would probably be much simpler on the programming end, and I could focus more on the visuals/sculpture. So… I brought my model into p5.js and researched how to get serial output from there. This is where I found p5.serialport, a p5.js library that allows your p5 sketch to communicate with an Arduino.

https://github.com/p5-serial/p5.serialport

https://github.com/p5-serial/p5.serialcontrol
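Once the model runs in p5.js, one simple way to drive the sculpture is to translate each recognized gesture into a short command and send it only when the gesture changes. The sketch below is hypothetical: the gesture labels and single-character commands are invented, and it takes a generic `send` function so the logic can run without hardware. In a p5.serialport sketch, `send` would presumably wrap the serial port object's `write` method, and the Arduino side would read the characters and move the servos accordingly.

```javascript
// Hypothetical mapping from Teachable Machine gesture labels to one-character
// commands an Arduino sketch could read over serial. Labels and characters
// are invented for illustration, not taken from the actual model.
const GESTURE_COMMANDS = {
  "Open Hand": "O",
  "Fist": "F",
  "Point": "P",
};

// Returns a handler to call with each new top label. It sends a command only
// when the gesture changes, so the serial line is not flooded every frame.
function createGestureSender(send, commands = GESTURE_COMMANDS) {
  let lastLabel = null;
  return function onLabel(label) {
    if (label === lastLabel) return false; // same gesture as before: send nothing
    lastLabel = label;
    const cmd = commands[label];
    if (cmd === undefined) return false; // unmapped class (e.g. background): ignore
    send(cmd);
    return true;
  };
}
```

Because an unmapped class still updates `lastLabel`, returning to a gesture after a background frame sends its command again, which keeps the sculpture in sync if a command was missed.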

Teachable Machine Model Demonstration

So, this model is pretty buggy. Luckily, redoing the Teachable Machine part is fairly simple, so I will keep testing and refining it until it works properly and reliably. I also want to try out some other classes that I didn’t include in this demo. I had made hand-waving and finger-gun classes, but they seemed to mess things up a bit, so I disabled them for this demo.

P5.js Code

https://editor.p5js.org/bookooBread/sketches/4MgV6eh-r

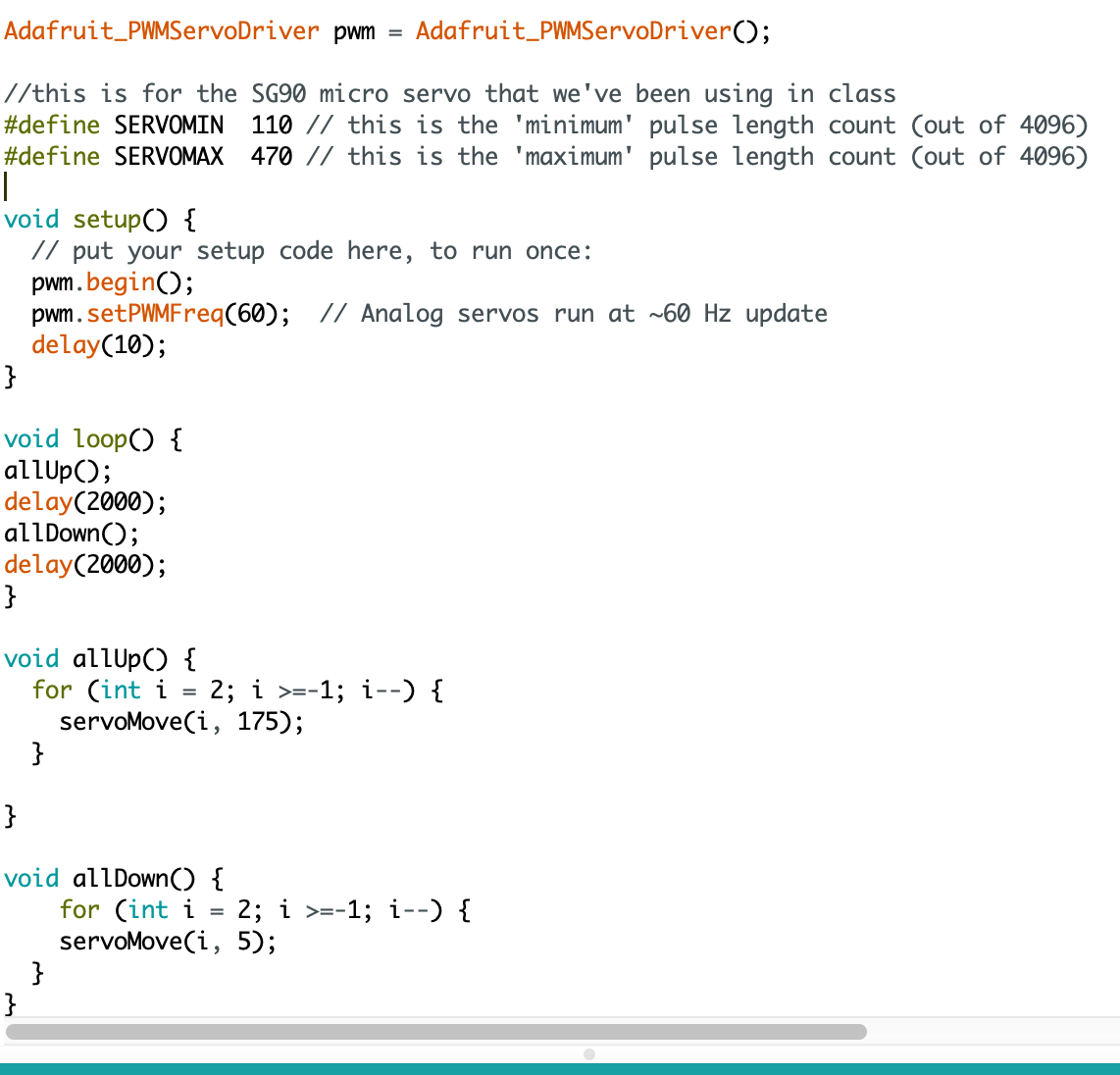

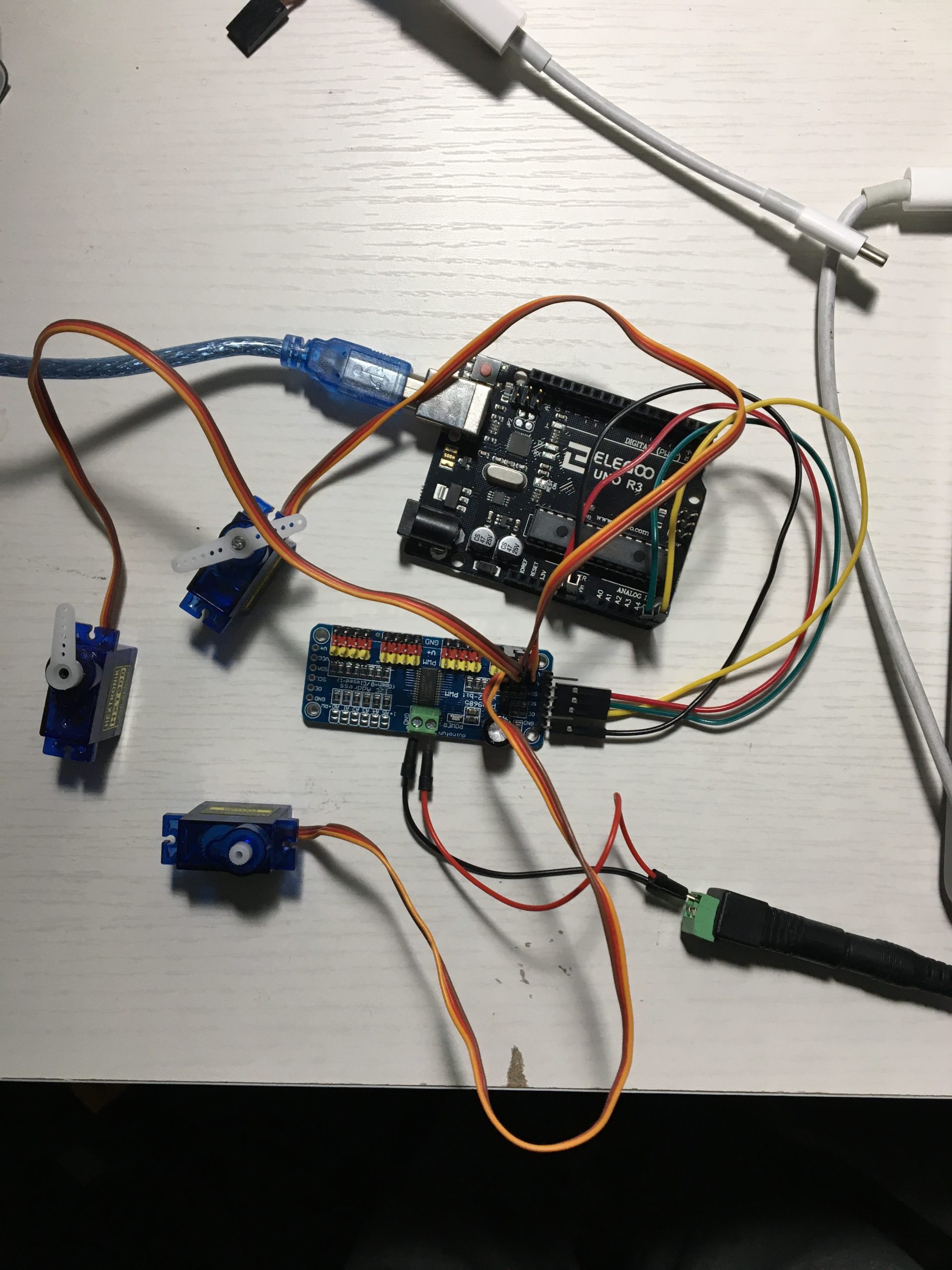

Arduino Code

Arduino Circuit Set Up

I have not been able to work on the actual Arduino sculpture much yet. So far, I just have the Arduino controlling three basic servos so I know things are working and it’s receiving a connection.

Downloading the p5.serialserver

For p5.js to communicate with the Arduino, I need the WebSocket server (p5.serialcontrol). Unfortunately, when I tried to download the GUI from GitHub, my computer did not recognize it as safe software, so that didn’t work.

Then I tried the other option: running it without the GUI from my command line… that didn’t work either…

So basically, I cannot go any further with this project until I figure out how to work around my computer blocking me from running/downloading this app. But! I am optimistic!

Also, I apologize for this extremely long post!

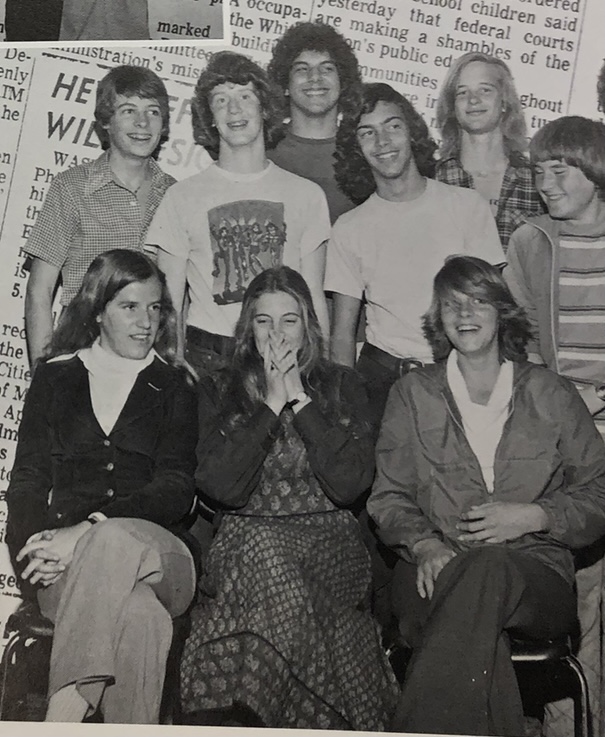

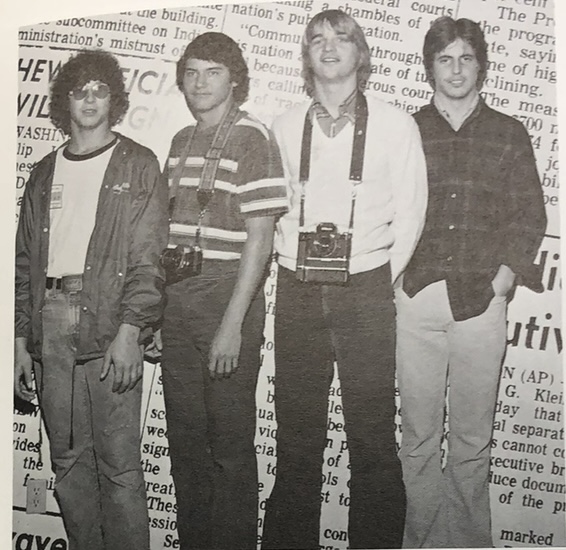

YoungLee – Image Processor

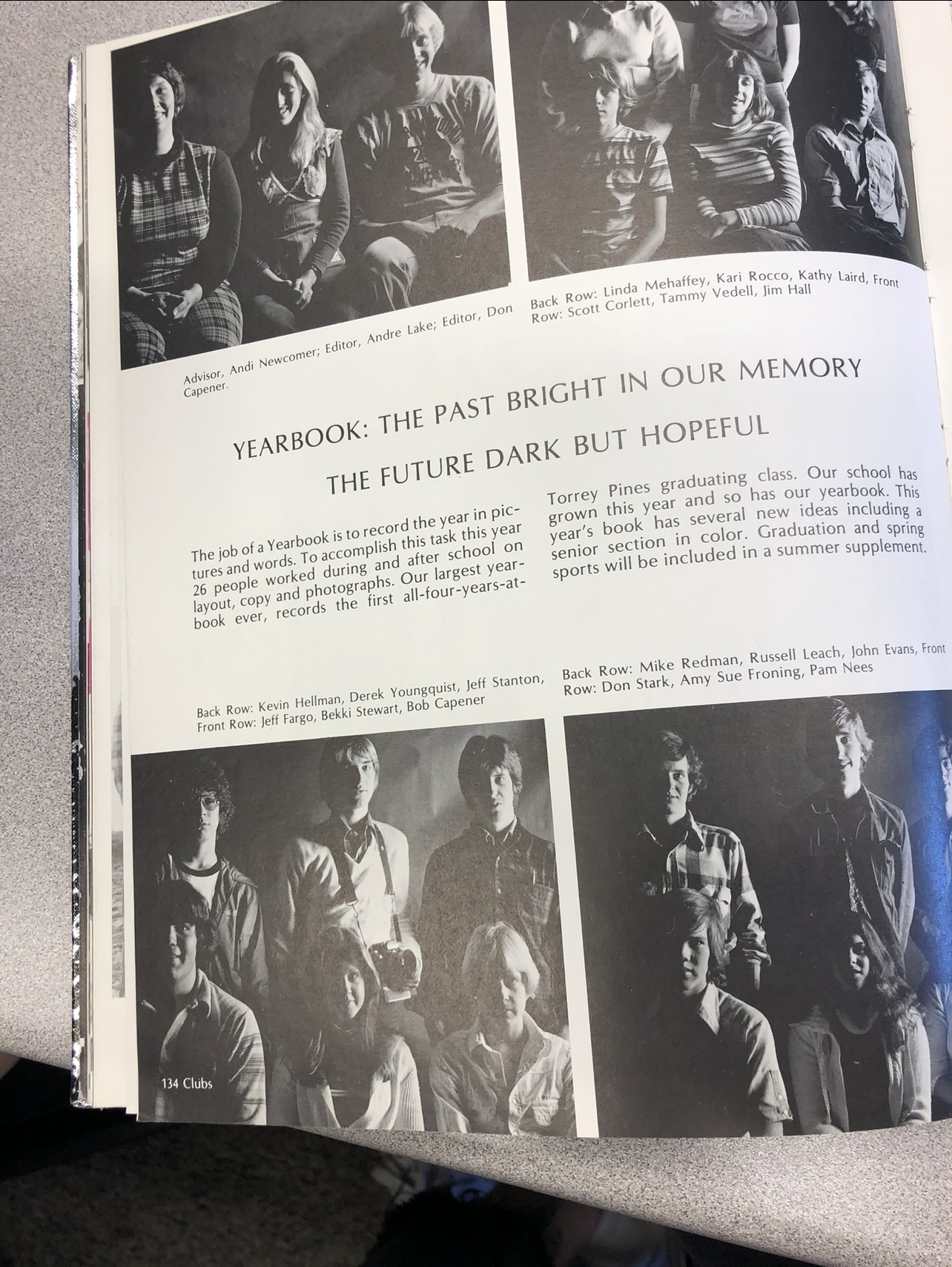

When I was part of my newspaper class in high school, I came across my high school’s yearbook from 1978. I remember being fascinated by it, so I took pictures of the black-and-white photos. In that yearbook, the only color photos were the senior portraits, so I colorized the other images using the Neural Filters tool in Photoshop. It was really easy to use, and I will definitely use it again in the future.

Images:

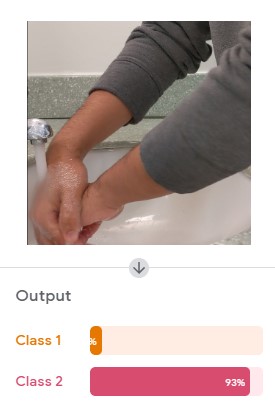

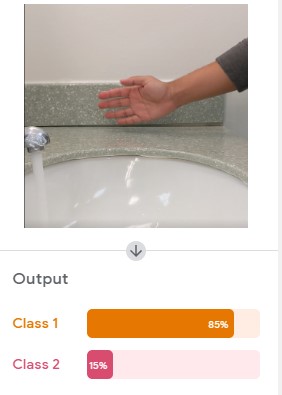

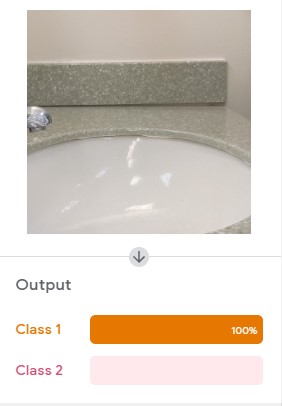

shrugbread – Situated Eye

I created a detector for whether I’m washing my hands. I couldn’t get the p5 code to work, so I took stills from a video to detect whether my hands were in the sink being washed or there were no hands in the shot. I was thinking about this application particularly as an enforcer, rather than something an individual uses to check themselves. A world where everything, even how long and how properly we wash our hands, is monitored… while it may come with good and productive goals and intentions, the thought is still discomforting. Below are the results of how well my detector worked. Class 1 below is not washing, and class 2 is washing.